This is the first blog post in the series “Revolutionizing Sentiment Analysis with GPT-4”. As its name would imply, this series dives into how we’ve pushed the bleeding edge of what’s possible with GPT-4 to create an AI that transforms the previously manual process of qualitative data analysis.

Our goal at Viable is to create generalizable AI data analysis that exceeds human performance.

Most of our customers are large companies that receive hundreds of thousands of pieces of feedback data per year. This data comes in various formats, about a wide array of products. What we receive can be in the form of product reviews, support chats with both human representatives and bots, conference call transcripts, help desk tickets, survey responses, social media threads, email, Slack chats, and almost anything else you can imagine in text.

At the heart of this task are three core challenges:

- Going beyond simple summarization and generating true analysis that exemplifies creative, reliable, and deeply perceptive reasoning over multidimensional data. It should qualitatively assess both text (like app reviews) and numerical data (like user statistics) in a unified manner.

- Overcoming the computational limits of large language models by joining multiple stages of analysis into a pipeline of models.

- Overcoming the contextual scaling limits of large language models by preprocessing vast amounts of raw data into logical, high-signal buckets.

To face these challenges, Viable uses a pipeline that can be described in five steps:

- All incoming data from a client company is classified as either signal or noise.

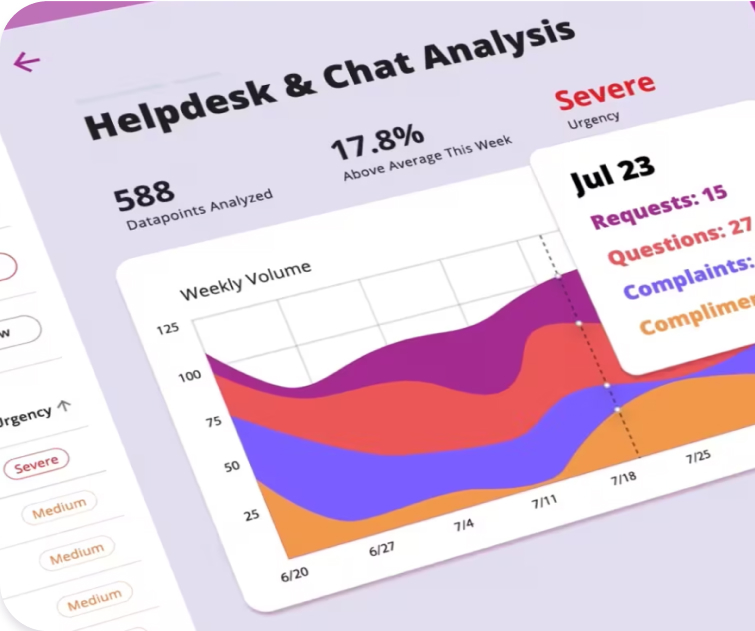

- The signal data is run through a generative Davinci model that assigns each data point a sheet of simple analysis. This sheet divides the data point into topic subdivisions and tags each subdivision as a complaint, compliment, question, or request. If the data comes from a survey, the sheet organizes responses by question instead.

- These enriched data points are then embedded with Curie embeddings, and the embeddings are clustered into extremely tight topical clusters.

- Next, clustered texts are combined with numerical user statistics, company information, and enrichment data to form a composite multidimensional prompt.

- The composite prompt is then analyzed by a generative Davinci model that outputs a sheet of complex analysis. This output is a report that assesses the dominant theme of the cluster, the urgency of the cluster, the personal backgrounds of the individuals represented in the texts, and the scope of topic variation across the data points within the cluster. The output is thus a cluster-level mini-report, and these mini-reports can be chained together to create an encyclopedia of analysis about a data source.
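
To make the data flow between steps 2 through 4 concrete, here is a minimal Python sketch. It is hypothetical throughout: the class and function names, the flattened text format, the prompt layout, and the 0.9 similarity threshold are all assumptions. The Davinci and Curie calls are left out entirely (embeddings are passed in as plain vectors), and the greedy threshold clustering is a simple stand-in swapped in for brevity, not the clustering method Viable uses.

```python
import math
from dataclasses import dataclass, field
from enum import Enum


class Tag(Enum):
    # The four labels the enrichment step assigns to each topic subdivision.
    COMPLAINT = "complaint"
    COMPLIMENT = "compliment"
    QUESTION = "question"
    REQUEST = "request"


@dataclass
class Subdivision:
    topic: str
    tag: Tag
    text: str


@dataclass
class EnrichedPoint:
    # Hypothetical shape of the "sheet of simple analysis" for one signal data point.
    source_id: str
    subdivisions: list[Subdivision] = field(default_factory=list)

    def as_text(self) -> str:
        # Flatten the sheet into the string that would be sent to the embedding model.
        return "; ".join(f"{s.tag.value} | {s.topic} | {s.text}" for s in self.subdivisions)


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def tight_clusters(vectors: list[list[float]], threshold: float = 0.9) -> list[list[int]]:
    # Greedy, order-dependent clustering: each vector joins the first cluster whose
    # seed is at least `threshold` cosine-similar, otherwise it seeds a new cluster.
    # A high threshold keeps clusters small and tight.
    clusters: list[list[int]] = []
    seeds: list[list[float]] = []
    for i, vec in enumerate(vectors):
        for members, seed in zip(clusters, seeds):
            if cosine(vec, seed) >= threshold:
                members.append(i)
                break
        else:
            clusters.append([i])
            seeds.append(vec)
    return clusters


def composite_prompt(cluster_texts: list[str], user_stats: dict[str, float], company_info: str) -> str:
    # Combine one cluster's texts with numeric user statistics and company context.
    stats = "\n".join(f"- {name}: {value}" for name, value in sorted(user_stats.items()))
    texts = "\n".join(f"- {t}" for t in cluster_texts)
    return (
        f"Company: {company_info}\n\n"
        f"User statistics:\n{stats}\n\n"
        f"Feedback in this cluster:\n{texts}\n\n"
        "Report on: Theme, Urgency, User, Scope."
    )
```

For example, `tight_clusters([[1.0, 0.0], [0.99, 0.05], [0.0, 1.0]])` returns `[[0, 1], [2]]`: the first two vectors are nearly parallel, while the third is orthogonal to both. Because the greedy pass depends on input order, a production system would more likely use a dedicated clustering algorithm; the point here is only that a high threshold trades cluster size for tightness.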

Just as the pipeline can be summarized in five steps, so too can the roadmap for improving this technology. In the fall of 2021, I wrote a plan for a gradual escalation of Viable’s ML capabilities, inspired by the five levels of Tesla’s Full Self Driving. We are now at level 3 of 5.

- Simple Unenriched Summarization: This is where we started in mid-2021. Raw data was embedded and clustered as-is, with no enrichment. A sample of each cluster was handed to Davinci to generate a 100-word blurb summarizing that cluster’s topic.

- Enriched Summarization: To make better clusters, I trained a proprietary iteration of Davinci. Instead of clustering and summarizing raw texts, we clustered enriched texts. The result was far tighter clusters, plus composite inputs that combined company data with sampled texts and were then summarized into 100-word blurbs.

- Multidimensional Analysis: This is the current epoch of performance, which began in the spring of 2022. To truly set us apart, I knew we had to transcend summarization and produce something deeper. This meant cranking up the dimensionality of generated text as far as I could get away with. The result was the highly complex composite input and output. We can now assess both texts and statistics and organize the analysis into specialized “slots”: Theme, Urgency, User, and Scope.

- Temporal Analysis and Cluster Meta-Analysis: What comes next? Right now a Theme-Level Mental Model can only assess a single cluster between a set start and end date. I want the next level of analysis to look not just down at what’s in front of it, but forward and backward and side to side. One such model should understand how a cluster is changing over time and how it relates to every other cluster. Several experiments we will showcase in this series demonstrate these next steps coming together.

- An Interactive Active-Learning Multi-Modal Analyst Agent: How good can it get? The ideal system of analysis should be able to reach out and retrieve information relevant to the work in real time. This means scouring the internet, searching internal corpora, and asking questions of its users. It should be able to take this scavenged knowledge and use it dynamically to improve its output via re-prompting. It should be able to correct its own mistakes and provide deep interpretability. And of course: why should it be limited to text?
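
Level 4 is described only as a goal. As a rough, hypothetical illustration of the two signals it names, the sketch below computes a cluster's volume per ISO week (how it changes over time) and the cosine similarity between cluster centroids (how clusters relate to one another). The function names, the weekly bucketing, and the use of mean-embedding centroids are assumptions for illustration; they are simple statistics of the kind such a model might be given, not Viable's Level 4 models.

```python
import math
from collections import Counter
from datetime import date


def weekly_volume(dates: list[date]) -> dict[tuple[int, int], int]:
    # Count a cluster's data points per ISO (year, week) to expose growth or decline.
    counts = Counter(tuple(d.isocalendar())[:2] for d in dates)
    return dict(sorted(counts.items()))


def centroid(vectors: list[list[float]]) -> list[float]:
    # Mean of a cluster's embedding vectors, component by component.
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster_relations(clusters: dict[str, list[list[float]]]) -> dict[tuple[str, str], float]:
    # Cosine similarity between the centroids of every unordered pair of clusters.
    cents = {name: centroid(vecs) for name, vecs in clusters.items()}
    names = sorted(cents)
    return {
        (a, b): cosine(cents[a], cents[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }
```

For example, `weekly_volume([date(2023, 1, 2), date(2023, 1, 3), date(2023, 1, 9)])` returns `{(2023, 1): 2, (2023, 2): 1}`, since January 2 and 3, 2023 fall in ISO week 1 and January 9 starts week 2.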

This escalating ladder of sophistication has served as a critical organizing force at Viable. We are seeing dramatic growth now that we have hit Level 3 and anticipate rapid acceleration as we advance to Levels 4 and 5. Check out Part 2 of the series, where we dive into how we fine-tuned our AI to overcome the three challenges outlined at the beginning of this blog post and reliably analyze clusters of multi-format text to deliver insightful natural-language reports.

About Sebastian

Sebastian Barbera is Viable’s Head of AI. Sebastian is an autodidact, having taught himself machine learning engineering and AI. He is an inventor with multiple software and hardware patents, and helped build Viable’s AI from Simple Unenriched Summarization to Multidimensional Analysis in a span of 17 months. Prior to Viable, Sebastian founded and ran his own company. He is based in New York City.