
GPT-3 achieves a new state of the art on clustering tasks, dramatically overtaking the competition.

Our goal at Viable is to create the world’s first fully autonomous customer data analyst. With our feedback reports system, we take tens of thousands of pieces of qualitative data from customer feedback sources and turn them into an actionable natural language report, in seconds. To accomplish this, we leverage OpenAI’s GPT-3 end-to-end.

At the foundation of our feedback reports pipeline is an unsupervised clustering system powered by GPT-3's vector embeddings. When clustered, GPT-3’s embeddings significantly outperform the competition.

An embedding is an encoded representation of a piece of text that maps the meaning of the text into an array of numbers. Here’s the beginning of the embedding for the phrase “AI is the future”:

[-0.0014679825399070978, -0.004783313721418381, 0.009507982060313225, -0.005366108380258083, 0.00782190915197134, 0.01914791762828827, 0.011384653858840466, 0.009845196269452572, …]

With these encoded representations we are able to perform measurements and transformations on the customer feedback text. By mapping the encoded distance between the representations, we can group them into clusters. The themes we surface in our feedback reports emerge from such clusters.
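To make "distance between representations" concrete, here is a minimal sketch that assumes cosine similarity as the measure. The three-dimensional vectors are made up for illustration; real embeddings have far more dimensions, and the variable names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy "embeddings" invented for this example, not real model output.
login_bug = [0.9, 0.1, 0.0]      # e.g. "I can't log in"
login_crash = [0.8, 0.2, 0.1]    # e.g. "App crashes at sign-in"
great_prices = [0.0, 0.2, 0.9]   # e.g. "Love the deals"

related = cosine_similarity(login_bug, login_crash)
unrelated = cosine_similarity(login_bug, great_prices)
print(f"related: {related:.3f}, unrelated: {unrelated:.3f}")
```

Texts about the same topic score close to 1, while unrelated texts score close to 0, which is what lets a clustering step group them.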

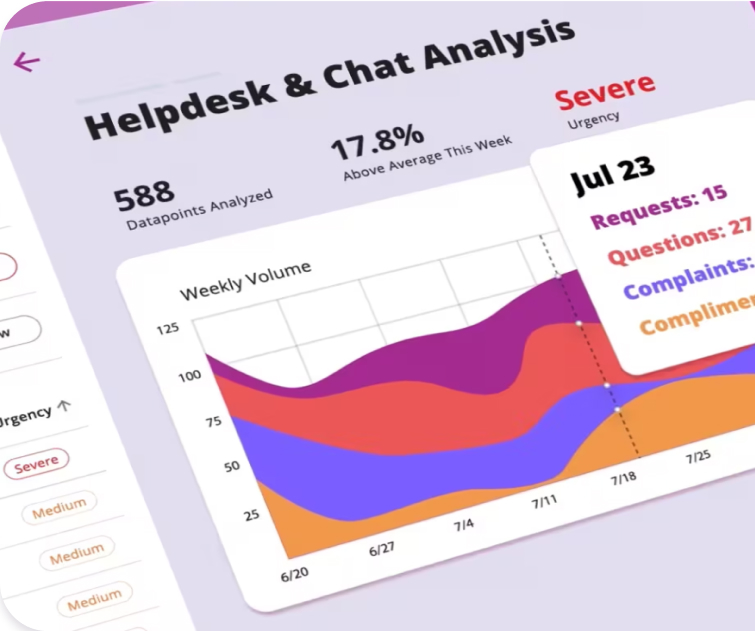


OpenAI’s Embedding endpoint is a special version of GPT-3, trained to facilitate tasks like clustering. In late 2021 we were one of the first companies given access to it. It sets a new state of the art for embedding performance.

Most machine learning models for generating embeddings are small. The previous generation of state-of-the-art models, like Sentence Transformers’ all-roberta-large-v1, are less than 2 gigabytes. The version of GPT-3 we use in production, “Curie”, is over 20 gigabytes. All this extra scale is brought to bear to create embeddings that are richer and make for better maps of meaning.

To test GPT-3’s performance, we developed a simple benchmarking approach.

We take samples from customer feedback data and build small hand-crafted datasets. We use our human understanding of good data clustering to act as a baseline for the AI. Below is a sample of such a human-made cluster of freely available app review data.

This particular data for Best Buy, the electronics retailer, comes from app reviews. It breaks down into 11 clusters. There’s one very large positive cluster, one medium-sized negative cluster, and nine tiny clusters addressing very specific issues with the app. The goal of a good clustering is to capture all the unique and actionable topics without artificially separating related texts or duplicating the same topic in two different clusters.

The first step to getting an AI system to imitate this clustering philosophy is to select a clustering algorithm. The one we use in production is the Sentence Transformers “Fast clustering” algorithm. We use it for two reasons: first, as the name suggests, it’s fast and efficient; second, it does not fix the number of clusters in advance and allows clusters of variable size, including clusters of one. We believe this highly organic and uneven clustering shape best imitates the human gold standard.

A clustering algorithm is not a machine learning model like GPT-3. Rather, it’s a simple piece of software that reads the embeddings (maps of meaning) produced by models like GPT-3. By measuring the “distance” between the embeddings of different texts, the algorithm is able to assemble a cluster structure.

Distance thresholds can be set to produce a cluster structure with a few big general clusters, or many small specific clusters. Embeddings from different models behave differently at the same threshold, much as maps of the world drawn at different scales require different calculations to measure relative distance.
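The effect of the threshold can be sketched with a simplified greedy clustering routine. This is an illustrative stand-in, not the Sentence Transformers implementation: the function `threshold_clusters`, the use of cosine similarity, and the toy vectors are all assumptions made for this example.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def threshold_clusters(embeddings, threshold):
    """Greedy threshold clustering; returns a list of clusters (lists of indices)."""
    n = len(embeddings)
    # Candidate cluster for item i: i itself plus every item at least `threshold` similar to it.
    candidates = [
        [j for j in range(n)
         if j == i or cosine_similarity(embeddings[i], embeddings[j]) >= threshold]
        for i in range(n)
    ]
    # Visit the largest candidates first and keep only still-unassigned items.
    candidates.sort(key=len, reverse=True)
    assigned, clusters = set(), []
    for candidate in candidates:
        members = [j for j in candidate if j not in assigned]
        if members:
            clusters.append(members)
            assigned.update(members)
    return clusters

# Toy vectors invented for illustration: three "login" texts, two "pricing" texts, one outlier.
vectors = [
    [0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.85, 0.15, 0.05],
    [0.0, 0.2, 0.9], [0.1, 0.1, 0.95],
    [0.1, 0.9, 0.1],
]

strict = threshold_clusters(vectors, 0.9)   # many small, specific clusters
loose = threshold_clusters(vectors, 0.15)   # few big, general clusters
print(len(strict), len(loose))
```

With the strict threshold the outlier ends up in a cluster of one, while the loose threshold merges everything into a single general cluster.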

To compare GPT-3’s embeddings to Sentence Transformers’ own Roberta model, we use the “adjusted Rand” metric. It measures how closely two groupings of the same items agree, corrected for the agreement that would be expected by chance.

The machine-built clusters are compared to the human-built clusters, and the result is a fractional number like 0.56. The higher the number, the more accurately the machine-built clusters imitate the human gold standard. To make the comparison fair, we test Roberta and GPT-3 at 10,000 different distance thresholds, to make sure we see the best of both.
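The scoring and threshold search described above can be sketched as follows. The adjusted Rand index is computed from pair counts using the standard formula. The `best_threshold` helper and the `cluster_at` callable are hypothetical names introduced for this example, and the candidate labelings are made up.

```python
from collections import Counter
from math import comb

def adjusted_rand(labels_true, labels_pred):
    """Adjusted Rand index between two labelings of the same items."""
    n = len(labels_true)
    if n < 2:
        return 1.0  # nothing to disagree about
    # Count pairs of items that share a cluster in both, in truth, and in the prediction.
    sum_cells = sum(comb(c, 2) for c in Counter(zip(labels_true, labels_pred)).values())
    sum_true = sum(comb(c, 2) for c in Counter(labels_true).values())
    sum_pred = sum(comb(c, 2) for c in Counter(labels_pred).values())
    expected = sum_true * sum_pred / comb(n, 2)   # agreement expected by chance
    max_index = (sum_true + sum_pred) / 2
    if max_index == expected:  # both labelings trivial (one cluster, or all singletons)
        return 1.0
    return (sum_cells - expected) / (max_index - expected)

def best_threshold(labels_true, cluster_at, thresholds):
    """Score cluster_at(t) at every threshold; return (best_score, best_threshold)."""
    return max((adjusted_rand(labels_true, cluster_at(t)), t) for t in thresholds)

# Made-up example: a human labeling and three machine labelings at different thresholds.
human = [0, 0, 1, 1]
machine = {0.5: [0, 0, 0, 0], 0.7: [5, 5, 9, 9], 0.9: [0, 1, 2, 3]}

score, threshold = best_threshold(human, machine.get, machine.keys())
print(score, threshold)
```

Cluster IDs do not need to match between the two labelings; only the grouping matters, which is why the labeling at 0.7 scores a perfect 1.0 despite using different IDs.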

Automated metrics like the adjusted Rand score are essential when running thousands of sequential tests. The best Rand values consistently predict the best clusters (100% predictive in our case), but the Rand values cannot perfectly capture the relative severity of mistakes made by the models. As you’ll see, the difference in quality might actually be understated by the difference in Rand scores.

After 10,000 different attempts, Sentence Transformers Roberta’s best performance is a Rand score of 0.8789. The resulting cluster structure looks like this:

You can see it successfully captures the largest structures, the first and second clusters. But it misclassified over 20% of the texts, mixing some negative texts in with what should be the positive cluster, and several positive texts in what should be the negative cluster.

This much mixing would undermine faith in the analysis of one of our report themes.

Now let's compare that performance to GPT-3.

After 10,000 runs, GPT-3’s best Rand score is 0.956. That's a lead of more than seven points (0.077) over Roberta. Let’s look at the clusters.

We can see that GPT-3 makes only a small handful of mistakes. The mistakes it does make are also more subtle than Roberta’s: no strongly negative or strongly positive texts are in the wrong clusters.

The upshot of using GPT-3 is a structure of clusters that are more trustworthy, and a much better fit to the human interpretation of the data.

Good clustering is the foundation of Viable’s reports system. With clusters that make sense, our other GPT-3 models—our “analysts”—can look at the data in the clusters and synthesize the nascent trends and implications of the texts. If a cluster is jumbled, the AI analysts have no hope of arriving at reports that make sense.

Errors stack—this is the unavoidable reality of any complex technological system. To strengthen the performance of our AI, we’re driven to step beyond the cutting edge and bring massive models like GPT-3 to bear on the problem. We believe that greater complexity and high demands for truth necessitate risk and experimentation. For Viable, this justifies the cost of the GPT-3 API. GPT-3 is the first model to produce clusterable embeddings that come within reach of an expert human baseline.

If you’re interested in learning more about GPT-3’s embeddings, you can read OpenAI’s official blog post and accompanying paper at https://openai.com/blog/introducing-text-and-code-embeddings/
